Hello and welcome to my Zero to Hero series, which aims to take you from knowing nothing to (hopefully) knowing something about using containers and orchestrating them in production environments. I'm Mike and, with luck, over the coming posts I'll be able to impart, in an easy-to-digest way, some of the knowledge I've acquired as a Technical Director and Founder at startups and SMEs. If you want to connect, feel free to reach out to me on Twitter @MikeDanielDev.

Just a heads up: this is not going to be a step-by-step tutorial. There are a million and one Docker tutorials out there. This series will give you some technical help, and where it doesn't I'll make sure to point you in the right direction, but really it's about the business and development context that so many tutorials leave out. I've personally found this to be the most difficult thing for developers and stakeholders to grasp. It's all well and good forcing yourself to learn a new technology because everyone is telling you to, but why? What benefit does it actually bring?

Starting with 'why?'

I'd like to begin this series with one question: "Why?" Let's forget about what technologies like Docker and Kubernetes actually do for a moment and briefly explore why the software industry has moved to them. If you don't really care, or just want to get stuck in, jump to the First steps with Docker section.

Packaging and deploying applications has often been really messy.

In general, two primary problems have been present since the start of software development, and both have gradually got worse as more and more technologies have emerged.

1) How do you package an application?

With all the different programming languages, frameworks, dependencies and platforms available to developers, packaging up applications and using them somewhere can become a very messy, manual process. Take the example of a simple PHP web application. If you want to deploy this on a server and expose it to the world, you'll need not only a server with the correct version of PHP installed, but also an HTTP server like Apache, plus any dependencies your application uses. And all of that sits on a machine with an operating system like Windows, Linux or macOS.

You can see how many variations there already are in this example of just one application. How are all these layers configured? What are their versions? What happens if you have two different servers configured slightly differently? How do you even test this remote setup locally? And all of this is exacerbated when you remember that you've also likely got to install a database like MySQL, a key-value store like Redis, load balancers, monitoring, data loggers, backup schedulers, certificate provisioners... the list goes on and on.

The more applications you have, the more the possible configurations multiply, and the effect is crippling. Over time it becomes increasingly difficult to package software and deploy it to different environments: developers are unable to build their applications in an environment resembling production, and operations teams scramble to implement developers' new requirements across an array of varied machines. Solutions like VMware have gone part of the way towards homogenising production environments, but very few technologies have gone the whole hog. Player 1 has entered the game: Docker.

2) How do you schedule and coordinate multiple applications?

The next problem arises after you've deployed your application successfully. How do you coordinate (orchestrate, if you want to sound technical) potentially hundreds of different applications, and how do you ensure that they're readily available and exposed to each other? There is actually a lot of crossover with the first problem, in that it becomes difficult for developers to run interdependent applications on their local system and essentially impossible to run them in a configuration similar to production. And on top of that, how do we guarantee that all our applications are up and running? What happens if an application goes down? What about all the applications that rely on it?

And to make matters worse, when it comes to deployment, coordinating multiple applications can be a nightmare if you need different apps to be updated across your cluster in tandem. If you've been following the DevOps movement over the past 10 years, one of the key issues it sets out to solve is high-risk, infrequent deployments. Being unable to easily schedule and deploy a multitude of applications across a cluster of servers is a major contributor to deployments that blow up. It's not good for business when deployments blow up.

So how do we solve this problem? How do we ensure that all our applications are available to talk to each other, rescheduled when they go down and easy to deploy when we have updates? Player 2 has entered the game: Kubernetes.

First steps with Docker

I'm going to cheat a little here. Rather than get you started with Docker in its raw form, I'm going to get you started by introducing you to Docker Compose. You can think of Docker Compose as a very watered-down Kubernetes. While Docker allows us to package our applications, it doesn't do much for actually running them. You can of course run Docker containers using nothing but Docker itself, but setting them up requires a number of terminal commands that are too easily forgotten. Instead, Docker Compose allows us to 'orchestrate' (I use the term loosely) simple collections of Dockerised apps in just one file. This means you can commit your configuration to your repository for other people to run with one command.
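To give a feel for those "too easily forgotten" commands, here's a rough sketch of what wiring up two containers by hand might look like, using the same WordPress and MySQL pair we'll run with Compose later in this post. The names `wp-net`, `wp-db` and `wp-app`, the `run` helper and the `DRY_RUN` variable are all my own illustrative choices, not anything Docker prescribes. By default the script only prints the commands rather than running them.

```shell
#!/bin/sh
# Sketch only: an approximate hand-rolled equivalent of a two-service
# Compose file. wp-net, wp-db and wp-app are hypothetical names.

# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

manual_setup() {
  # A shared network lets the containers find each other by name.
  run docker network create wp-net
  # A named volume keeps the database files between restarts.
  run docker volume create db_data
  # The database container.
  run docker run -d --name wp-db --network wp-net --restart always \
    -v db_data:/var/lib/mysql \
    -e MYSQL_ROOT_PASSWORD=somewordpress \
    -e MYSQL_DATABASE=wordpress \
    -e MYSQL_USER=wordpress \
    -e MYSQL_PASSWORD=wordpress \
    mysql:5.7
  # The WordPress container, published on port 8000 of the host.
  run docker run -d --name wp-app --network wp-net --restart always \
    -p 8000:80 \
    -e WORDPRESS_DB_HOST=wp-db:3306 \
    -e WORDPRESS_DB_USER=wordpress \
    -e WORDPRESS_DB_PASSWORD=wordpress \
    -e WORDPRESS_DB_NAME=wordpress \
    wordpress:latest
}

manual_setup
```

That's four commands and a dozen flags to remember for just two containers, which is exactly the kind of thing a single Compose file saves you from.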

Step 1) Install Docker Desktop

First things first, on your development machine head to Docker's official site and download Docker Desktop for your computer. This install will include everything we're going to be using throughout this series, including Docker, Docker Compose and Kubernetes. Once running, you should see Docker's whale icon in your application tray/menu bar.

Step 2) Prepare for Docker Compose

As promised, our first foray into this new world will not be with Docker but with Docker Compose. I'm going to be copying a lot of the following straight from the documentation that Docker provide. I'm doing this because at this stage I just want to demonstrate the power of what's going on, rather than the details of how it's working. You can read about what's going on in more detail here: https://docs.docker.com/compose/wordpress/

First, create a folder somewhere on your drive and create a file named docker-compose.yaml. Open the file in your favourite text editor and paste in the following. Don't worry about what it all means, you can explore that later. For now just stick with me. This file defines a series of rules that outline how two Docker images (one is WordPress and the other is MySQL) can be run together. What's incredible is that this one file will be all we need to run the whole application, database and all. You're not installing XAMPP/MAMP or setting anything up other than Docker.

    version: '3.3'

    services:
      db:
        image: mysql:5.7
        volumes:
          - db_data:/var/lib/mysql
        restart: always
        environment:
          MYSQL_ROOT_PASSWORD: somewordpress
          MYSQL_DATABASE: wordpress
          MYSQL_USER: wordpress
          MYSQL_PASSWORD: wordpress

      wordpress:
        depends_on:
          - db
        image: wordpress:latest
        ports:
          - "8000:80"
        restart: always
        environment:
          WORDPRESS_DB_HOST: db:3306
          WORDPRESS_DB_USER: wordpress
          WORDPRESS_DB_PASSWORD: wordpress
          WORDPRESS_DB_NAME: wordpress

    volumes:
      db_data: {}

Once you've created your file, open a terminal in the directory with your docker-compose.yaml file, and type:

docker-compose up

Your terminal will do its own thing. Hang in there. You may see references to 'images' being 'pulled' and that's important because what you're really doing here is pulling in a series of public Docker images, held in public repositories, and booting them up. The images contain all the dependencies for each of their own applications including the operating system that they run on.
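One small wrinkle worth knowing about: newer Docker installs ship Compose V2, which is invoked as `docker compose` (with a space), while older setups use the standalone `docker-compose` binary. The helper below is a minimal sketch of how you might pick whichever one is available. `compose_cmd` is a name I've made up for illustration, and the usage lines in the comments assume you're in the folder containing your docker-compose.yaml.

```shell
#!/bin/sh
# Sketch only: print whichever Compose command is available on this machine.
# compose_cmd is a hypothetical helper name, not part of Docker.
compose_cmd() {
  if docker compose version >/dev/null 2>&1; then
    echo "docker compose"
  elif command -v docker-compose >/dev/null 2>&1; then
    echo "docker-compose"
  else
    # Neither flavour found.
    return 1
  fi
}

# Usage (run from the folder containing docker-compose.yaml):
#   COMPOSE="$(compose_cmd)" || { echo "Docker Compose not found" >&2; exit 1; }
#   $COMPOSE up -d              # start everything in the background
#   $COMPOSE ps                 # list the running services
#   $COMPOSE logs -f wordpress  # follow the WordPress logs
#   $COMPOSE down               # stop and remove the containers
```

Running with `-d` gives you your terminal back, and `down` removes the containers while leaving the named `db_data` volume (and so your database) in place.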

When you see something similar to the following line, you're done. If you never see that exact line, you're also done once the terminal has stopped printing new output.

wordpress_1 | [Sun Feb 02 12:03:56.445622 2020] [core:notice] [pid 1] AH00094: Command line: 'apache2 -D FOREGROUND'
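If you'd rather not eyeball the scrolling output, you could search the logs for that Apache startup line instead. This is just a sketch: `wordpress_ready` is a hypothetical helper name, and it assumes the WordPress image keeps printing the `apache2 -D FOREGROUND` notice shown above, which may not hold for every image version.

```shell
#!/bin/sh
# Sketch only: succeed if the log text on stdin contains Apache's
# "running in the foreground" notice. wordpress_ready is a made-up name.
wordpress_ready() {
  grep -q "apache2 -D FOREGROUND"
}

# Usage (from the folder containing docker-compose.yaml):
#   docker-compose logs wordpress | wordpress_ready && echo "WordPress is up"
```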

Behold!

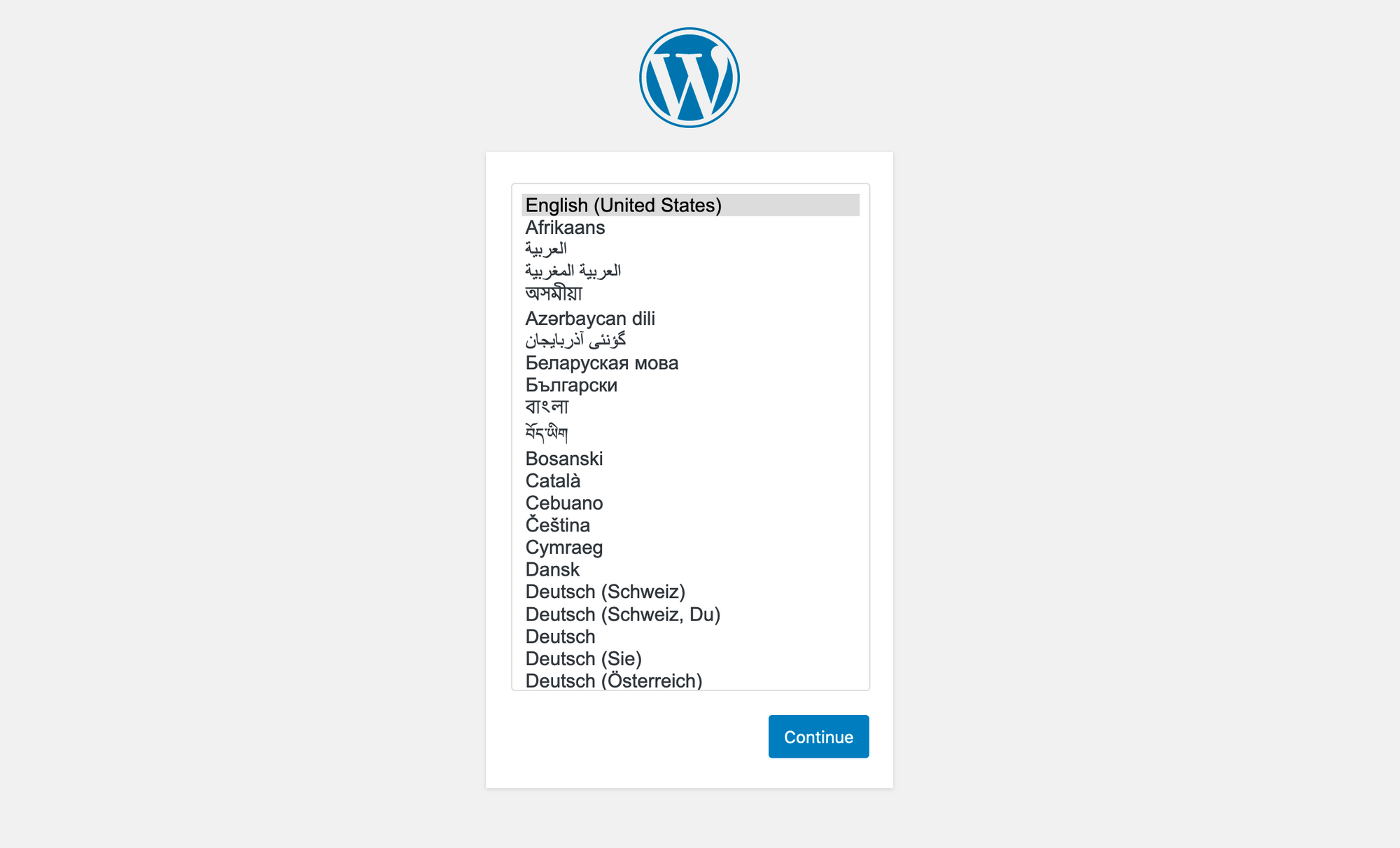

In your web browser go ahead and type in the URL: http://localhost:8000.

You should see something like this:

To be honest, if you're seeing this screen, then we've already achieved all that I wanted to in this tutorial. You're more than welcome to go ahead and set up WordPress, but the really important thing is that with just one small .yaml file, you've got a PHP application and a MySQL DB working together. It didn't matter what OS you were on, it didn't matter what type of application it was or what technologies it used; all that mattered was that you had Docker installed, and everything else was taken care of. All the dependencies, the application code itself and its configuration are handled by the Docker images that we've referenced in our .yaml file.
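The same check can be done from the terminal, which is handy if you ever want a script to wait for the site before doing something else. Here's a minimal sketch using curl. `wait_for_url` and its retry and delay arguments are my own invention for illustration, and it assumes curl is installed on your machine.

```shell
#!/bin/sh
# Sketch only: poll a URL until it answers without an HTTP error.
# wait_for_url is a hypothetical helper; arguments are
#   $1 = URL, $2 = max attempts (default 30), $3 = seconds between tries (default 2)
wait_for_url() {
  url="$1"
  attempts="${2:-30}"
  delay="${3:-2}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl fail on HTTP errors (4xx/5xx); redirects count as success.
    if curl -fsS --max-time 5 -o /dev/null "$url"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage:
#   wait_for_url http://localhost:8000 && echo "Site is answering"
```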

Hopefully you can begin to see how Docker provides an incredibly powerful and convenient way of packaging your applications. This is our first goal in this series: to package our application so that when it comes to deployment we can just deploy our Docker image with next to zero setup and have the application running in production exactly as it does in development, in a container.

Why don't you look around the web for more example Docker Compose configurations and see what other cool applications you can run with one command?

To continue this journey, check out part 2 of this series where we get into the nitty gritty of Dockerising (I'm happy with this new transitive verb if you are) applications and the principles behind doing so.